Bringing Complex 3D Models Into Frame

The creation and use of 3D models has been skyrocketing. This trend started well before the recent metaverse hype, with more companies creating sophisticated 3D models of their products to display online, and more artists using increasingly powerful, accessible tools like Blender to create 3D assets.

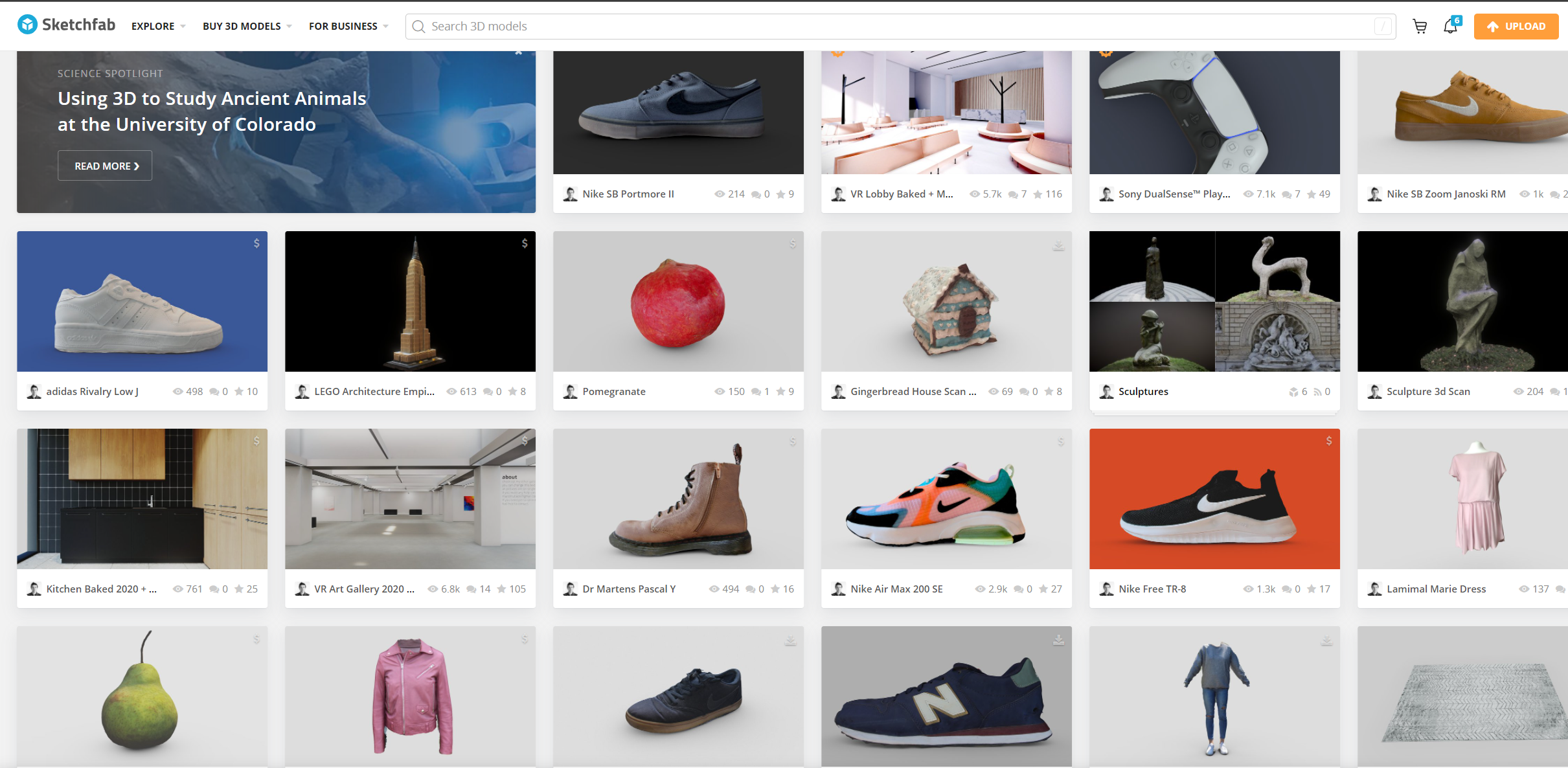

3D models are essential building blocks of many video games, all sorts of digital art, VR experiences, and even websites themselves. The success of 3D model libraries and marketplaces like Sketchfab, which was recently acquired by Epic Games, attests to the growing importance of, and market for, 3D assets.

Places like Frame and other destinations in the metaverse provide a natural way to explore and examine 3D models with other people. You get to see the model in a spatial context, walk around it, see which angle other people are viewing it from, or even hop into VR for a fully immersive look. It's pretty tough to get that kind of shared, immersive experience with 3D models in traditional video conferencing tools.

3D models can be really large, though, with incredibly complex geometry (a high "poly count") and/or super crisp, high-resolution textures. This isn't always an issue, but when you're trying to render them in real time inside a website, in a collaborative context, on as many devices as possible, these heavy models can be troublesome.
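To get a feel for why high-resolution textures are so heavy, here is a rough size estimate. Image files like PNG or JPEG are small on disk, but they are typically decoded to raw texels before the GPU uses them, while GPU block-compressed formats (such as BC7 or BC1, the kind of formats Basis Universal textures can be transcoded into) stay compressed in memory. This is a minimal sketch assuming the standard block layouts for these three formats; the function name and format list are illustrative, and real engines may add alignment padding.

```typescript
// Rough size estimate for a 2D texture, optionally including its full mip chain.
// Sizes use the standard block layouts for each format; real engines may add
// alignment padding, so treat the results as approximations.
interface FormatInfo {
  blockSize: number; // texels per block edge (1 = uncompressed, one texel per "block")
  bytesPerBlock: number;
}

type TextureFormat = "rgba8" | "bc7" | "bc1";

const FORMATS: Record<TextureFormat, FormatInfo> = {
  rgba8: { blockSize: 1, bytesPerBlock: 4 }, // uncompressed, 4 bytes per texel
  bc7: { blockSize: 4, bytesPerBlock: 16 }, // 4x4 blocks, 8 bits per texel
  bc1: { blockSize: 4, bytesPerBlock: 8 }, // 4x4 blocks, 4 bits per texel
};

function textureBytes(
  width: number,
  height: number,
  format: TextureFormat,
  withMips = true,
): number {
  const { blockSize, bytesPerBlock } = FORMATS[format];
  let total = 0;
  let w = width;
  let h = height;
  for (;;) {
    // Block formats round each level up to whole blocks, so tiny mips still cost one block.
    total += Math.ceil(w / blockSize) * Math.ceil(h / blockSize) * bytesPerBlock;
    if (!withMips || (w === 1 && h === 1)) break;
    // Each mip level halves both dimensions, clamped at 1.
    w = Math.max(1, Math.floor(w / 2));
    h = Math.max(1, Math.floor(h / 2));
  }
  return total;
}
```

For example, a single 4096x4096 texture with a full mip chain comes to about 85.3 MiB as uncompressed RGBA8, versus about 21.3 MiB as BC7, and a model can carry several such textures.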

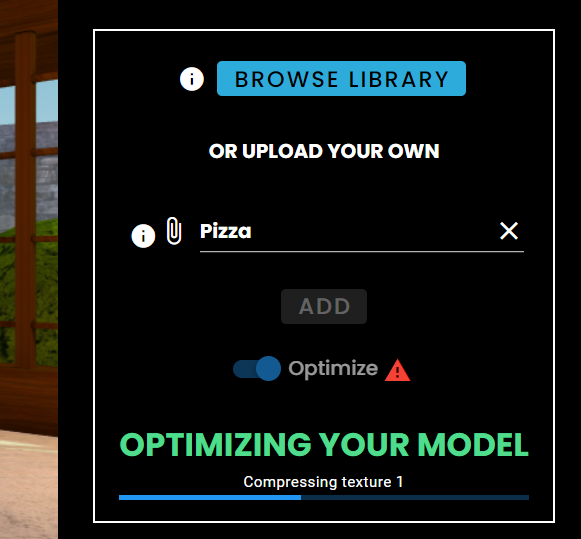

Models generated by photogrammetry, the process of creating a 3D model from photographs, are notoriously heavy and "unoptimized", with complex geometry and massive textures. Check out this one, a 3D model of a slice of pizza generated with photogrammetry:

Very cool, but this isn't a small model. We're looking at some juicy textures and almost 150K triangles in the mesh.
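As a back-of-the-envelope check on what 150K triangles means for file size, the sketch below estimates the raw geometry buffers of an indexed mesh. The vertex layout (float32 position, normal, and UV) and 32-bit indices are assumptions chosen for illustration, not details of the pizza model itself, and the "vertices ≈ triangles / 2" rule only holds for large, closed meshes.

```typescript
// Back-of-the-envelope size of an indexed triangle mesh's geometry buffers.
// Assumptions (illustrative, not taken from any specific model):
// - each vertex stores a float32 position (12 B), float32 normal (12 B), float32 UV (8 B);
// - indices are 32-bit;
// - vertices ≈ triangles / 2, which holds for large closed meshes (Euler's formula)
//   but undercounts meshes with many UV seams or split vertices.
function estimateMeshBytes(
  triangles: number,
  bytesPerVertex = 32,
  bytesPerIndex = 4,
): number {
  const vertices = Math.ceil(triangles / 2);
  const vertexBytes = vertices * bytesPerVertex;
  const indexBytes = triangles * 3 * bytesPerIndex; // three indices per triangle
  return vertexBytes + indexBytes;
}
```

Under these assumptions, `estimateMeshBytes(150000)` gives 4,200,000 bytes, roughly 4.2 MB of geometry before a single texture is counted.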

Because Frame runs right in the browser, we have to be careful about how much we try to render in a single scene. If you flood a Frame with tons of assets, it will eventually start to lag, and may not even load on mobile devices or in VR. This is why we limit the size of the assets you can bring into Frame; for 3D models, that limit is 15MB (for now).

Although it would take me only a second to eat that particular slice of pizza, the model file is so large that, under normal circumstances, you wouldn't be able to bring it into Frame.

We put some thought into how to let users bring some of these heavy, complex models into Frame without sacrificing too much speed and performance, and ended up with a pipeline that optimizes 3D models when they are imported, before we load them into Frame. The pipeline reduces the size of the mesh geometry via mesh compression and compresses the textures via Basis Universal texture compression. Frame teammate Will Murphy orchestrated the pieces of this pipeline puzzle.
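To illustrate one idea behind mesh compression, here is a minimal sketch of vertex position quantization: storing each float32 coordinate as a 16-bit integer on a grid spanning the mesh's bounding box, which halves the position data at the cost of a tiny, bounded rounding error. This is a generic technique used by common mesh compression schemes, not Frame's actual pipeline; the function names and the `Quantized` layout are hypothetical.

```typescript
// Illustrative sketch of one common step in mesh compression: quantizing
// float32 vertex positions to 16-bit integers inside the mesh's bounding box.
// Hypothetical helper names; not Frame's implementation.
interface Quantized {
  data: Uint16Array; // 3 components (x, y, z) per vertex
  min: [number, number, number]; // bounding-box minimum corner
  scale: number; // world units per quantization step
}

function quantizePositions(positions: Float32Array, bits = 16): Quantized {
  if (bits < 1 || bits > 16) throw new Error("bits must be in 1..16 for Uint16Array storage");
  const min: [number, number, number] = [Infinity, Infinity, Infinity];
  const max: [number, number, number] = [-Infinity, -Infinity, -Infinity];
  for (let i = 0; i < positions.length; i += 3) {
    for (let c = 0; c < 3; c++) {
      min[c] = Math.min(min[c], positions[i + c]);
      max[c] = Math.max(max[c], positions[i + c]);
    }
  }
  // One uniform scale for all axes keeps the mesh's proportions intact.
  const extent = Math.max(max[0] - min[0], max[1] - min[1], max[2] - min[2]);
  const steps = (1 << bits) - 1;
  const scale = extent > 0 ? extent / steps : 1;
  const data = new Uint16Array(positions.length);
  for (let i = 0; i < positions.length; i++) {
    // Snap each coordinate to the nearest grid step relative to the box corner.
    data[i] = Math.round((positions[i] - min[i % 3]) / scale);
  }
  return { data, min, scale };
}

function dequantizePositions(q: Quantized): Float32Array {
  const out = new Float32Array(q.data.length);
  for (let i = 0; i < q.data.length; i++) {
    out[i] = q.min[i % 3] + q.data[i] * q.scale;
  }
  return out;
}
```

Each reconstructed coordinate lands within about half a grid step of the original, which is usually invisible at viewing scale. Real mesh compressors layer further tricks (such as index and attribute encoding) on top of this.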

Because these techniques can be computationally intensive, we run them in a separate worker thread: a thread in the web browser that runs "in the background" without blocking the application's main thread. This way, while the optimization and upload can still take some time, Frame won't lag in the meantime.
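Here is a minimal sketch of the main-thread side of that arrangement: jobs are posted to a worker and tracked by id, so the page keeps responding while results come back asynchronously. `WorkerLike` stands in for a browser `Worker`, and the `OptimizerClient` class and message shapes are hypothetical, not Frame's code.

```typescript
// Main-thread bookkeeping for optimization jobs sent to a worker (hypothetical sketch).
// `WorkerLike` matches the part of the browser Worker API used here.
interface WorkerLike {
  postMessage(message: unknown, transfer: ArrayBuffer[]): void;
}

interface JobRequest {
  id: number;
  payload: ArrayBuffer; // raw model file bytes
}

interface JobResponse {
  id: number;
  result?: ArrayBuffer; // optimized model bytes
  error?: string;
}

interface PendingJob {
  resolve: (result: ArrayBuffer) => void;
  reject: (error: Error) => void;
}

class OptimizerClient {
  private worker: WorkerLike;
  private nextId = 1;
  private pending = new Map<number, PendingJob>();

  constructor(worker: WorkerLike) {
    this.worker = worker;
  }

  // Post a job and return immediately; the main thread keeps running meanwhile.
  optimize(payload: ArrayBuffer): Promise<ArrayBuffer> {
    const id = this.nextId++;
    return new Promise<ArrayBuffer>((resolve, reject) => {
      this.pending.set(id, { resolve, reject });
      const request: JobRequest = { id, payload };
      // Transferring the buffer hands it to the worker without copying it.
      this.worker.postMessage(request, [payload]);
    });
  }

  // Call this from the worker's "message" event with the event's data.
  handleMessage(response: JobResponse): void {
    const job = this.pending.get(response.id);
    if (!job) return; // unknown or already-settled job
    this.pending.delete(response.id);
    if (response.error !== undefined) job.reject(new Error(response.error));
    else if (response.result !== undefined) job.resolve(response.result);
    else job.reject(new Error("empty response from worker"));
  }

  get pendingCount(): number {
    return this.pending.size;
  }
}
```

In a browser you would wire it up with something like `worker.onmessage = (e) => client.handleMessage(e.data)`. Note that a transferred `ArrayBuffer` becomes unusable on the main thread after posting, which is the trade-off for avoiding a copy of a large model file.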

Before too long, boom! The model appears in Frame and I can walk around it, check it out with others, and even apply a fun spin animation to accelerate my hunger.

While it's tempting to just explore all of the pizza models (for, uh, research), let's not stop there.

I wanted to put our model optimization pipeline to the test with a bigger, more complex model, so I tried a photogrammetry scan of a location at Pompeii. The model imported, I flipped on fly mode, and the next thing I knew I was swooping around it with a colleague, talking about how mind-blowing this is.

It's important to note that our pipeline won't be able to handle every model: extremely large models still can't be crunched down below our file size limit. And if the optimizer degrades how your model looks, you can try uploading without it.
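The checks described above boil down to a simple decision, sketched here under loudly stated assumptions: the `decideImport` function and its result shape are hypothetical, and reading "15MB" as 15 MiB is an assumption rather than a documented detail of Frame.

```typescript
// Hypothetical sketch of the import checks described above; not Frame's code.
// Assumption: "15MB" is read here as 15 MiB (15 * 1024 * 1024 bytes).
const MAX_MODEL_BYTES = 15 * 1024 * 1024;

type ImportDecision =
  | { kind: "upload-optimized"; bytes: number }
  | { kind: "upload-original"; bytes: number }
  | { kind: "reject"; reason: string };

// `optimizedBytes` is null when the user chose to upload without the optimizer.
function decideImport(originalBytes: number, optimizedBytes: number | null): ImportDecision {
  if (optimizedBytes === null) {
    // Skipping the optimizer means the original file itself must fit under the limit.
    return originalBytes <= MAX_MODEL_BYTES
      ? { kind: "upload-original", bytes: originalBytes }
      : { kind: "reject", reason: "model exceeds the size limit without optimization" };
  }
  // Otherwise only the optimized output has to fit.
  return optimizedBytes <= MAX_MODEL_BYTES
    ? { kind: "upload-optimized", bytes: optimizedBytes }
    : { kind: "reject", reason: "model is still over the size limit after optimization" };
}
```

For instance, a 20 MiB model that optimizes down to 10 MiB can be imported, while one that only shrinks to 16 MiB is still rejected.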

In the future, we'll apply this same model optimization pipeline to 3D environments that you upload to Frame. I expect we'll bring a few other improvements to it as well, to help you bring even more complex 3D models into Frame for real-time, collaborative exploration.

The metaverse needs models. Happy importing!

You can get started with Frame for free at learn.framevr.io